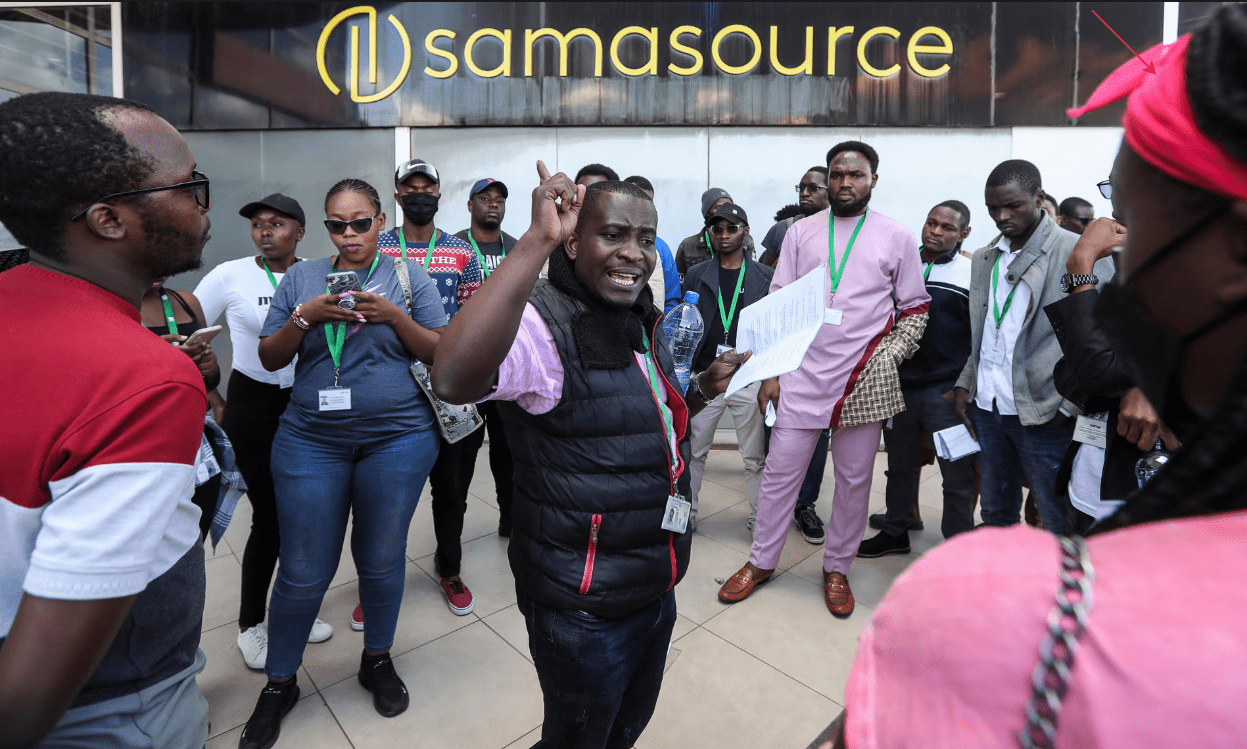

In 2023, 186 Facebook moderators in Kenya filed lawsuits against parent company Meta and outsourcing company Samasource Kenya, alleging intentional infliction of mental harm, unfair labor practices, modern slavery, and wrongful termination. The case files revealed that more than 140 of the former moderators suffered from severe post-traumatic stress disorder.

Meta challenged those claims, but in September the appeals court dismissed Meta’s petition contesting the authority of the Kenyan courts. The case is scheduled to be heard on February 26, 2025.

According to the lawsuit, Dr. Ian Kanyanya, head of mental health services at the Kenyatta National Hospital in Nairobi, found that the former Facebook moderators had:

- Post-traumatic stress disorder (PTSD)

- Generalized anxiety disorder (GAD)

- Major depressive disorder (MDD)

The moderators were given a steady stream of images to check for eight to 10 hours a day in a cold, warehouse-like room, under bright lights and with minute-by-minute monitoring of their work activity. From 2019 to 2023, they reviewed posts that originated in Africa and were written in their native languages. However, they were paid eight times less than their counterparts in the U.S., the lawsuit documents said.

According to the filing, Kanyanya concluded that the primary cause of the 144 people’s mental health problems was their work as Facebook content moderators because they were “exposed daily to dangerous graphic content that included videos of gruesome murders, self-mutilation, suicides, suicide attempts, sexual assault, explicit sexual content, physical and sexual abuse of children, horrific violent acts and more.”

All of that led moderators to abuse alcohol, sleeping pills, and drugs. Some reported marital breakdowns and loss of contact with their families. Some felt that they were being watched and that if they returned home they would be followed and killed. Four suffered from trypophobia.

Martha Dark, founder and one of the executive directors of Foxglove, a British non-profit organization that supported the lawsuit, said: “The evidence is indisputable: moderating Facebook is dangerous work that inflicts lifelong PTSD on almost everyone who moderates it.”

Moderation and the related task of labeling content are often a hidden part of the tech boom. Meta and Samasource declined to comment on the claims because of the litigation.
