VisionAI is a neural network from Google that recognizes objects in pictures and assesses their degree of “content safety”, but it can do more than that. In this review, we explain how to use this free tool when making creatives, how it recognizes objects, and how it assigns a “danger” level to content.

What is Google Vision and why is it needed when checking creatives for Facebook?

In short, the tool allows marketers to see advertising creatives through the eyes of AI.

What does it provide? It gives an understanding of how Facebook moderation works and the ability to make creatives that do not violate the rules of the advertising network. It is useful for those who drive traffic on commodity, gambling, betting, dating, crypto, Nutra, and white / gray offers.

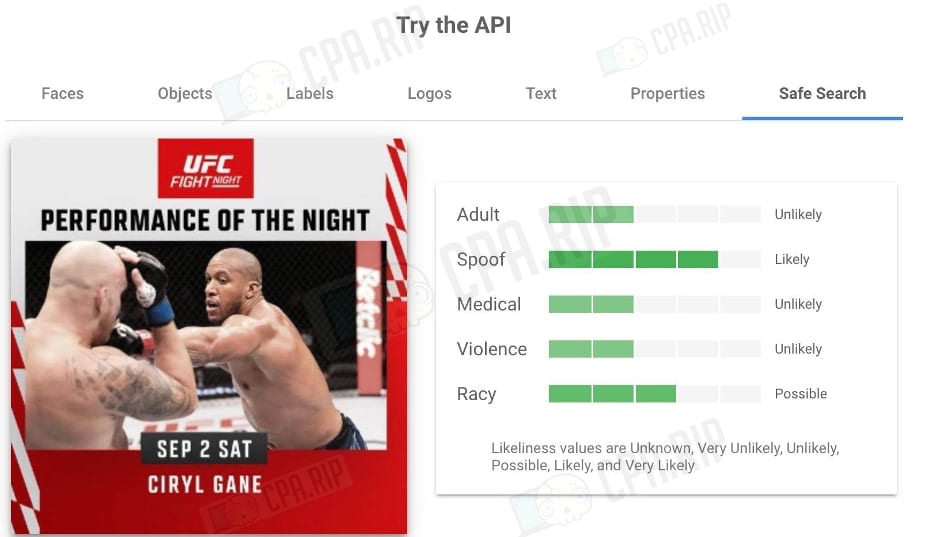

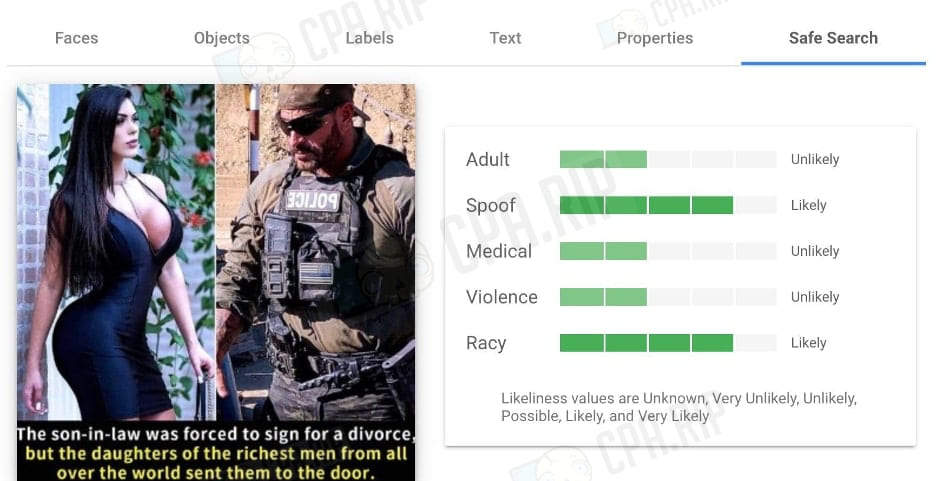

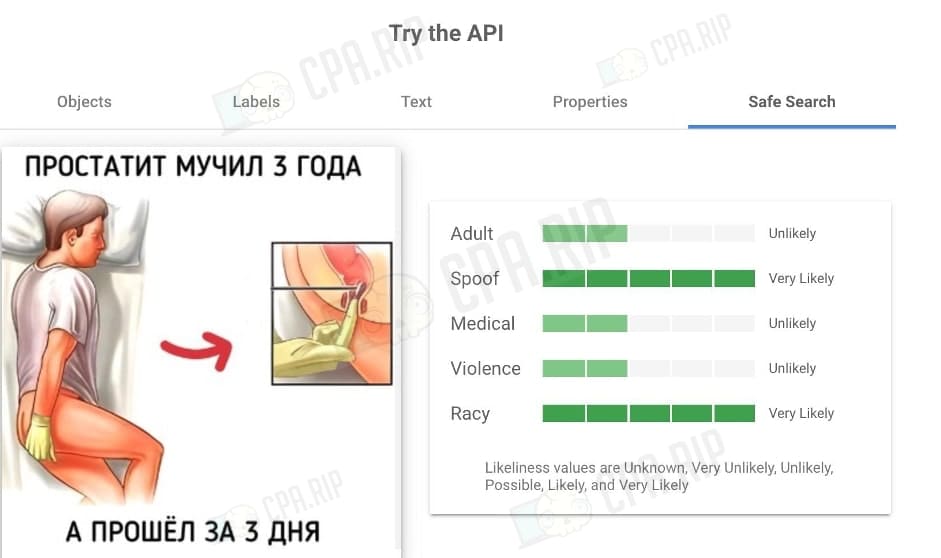

VisionAI’s SafeSearch Detection feature works with five categories: Adult, Spoof, Medical, Violence, and Racy. It displays the likelihood of each of these “dangers” being present in an image. The more “dangers” the AI sees, the higher the risk of the ad being blocked.

The official documentation says little about how SafeSearch Detection works in the VisionAI neural network. It only describes the categories:

- adult (18+ content) – may contain nude elements, pornographic images and animations, and sexual activities;

- spoof – modifications have been added to the original image to “make it look funny or offensive”;

- medical – the likelihood that the image is a medical image;

- violence – the image may contain violent scenes;

- racy – a picture with “revealing or transparent clothing, hidden nudity, suggestive or provocative poses, or close-ups of sensitive areas of the body”.

Facebook likely uses image recognition and evaluation algorithms similar to those of Google Vision, but there is no 100% guarantee. VisionAI should be used as an additional tool for analyzing creatives for Facebook and other platforms. VisionAI has an API, so in theory you can automate the process instead of checking each picture manually.
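As a rough idea of what such automation could look like, here is a minimal Python sketch for the Vision API's REST endpoint (`images:annotate`) with the `SAFE_SEARCH_DETECTION` feature. It only builds the request body and parses a response. The helper names and the sample response are our own illustrations, and actually sending the request (with an API key or OAuth token) is left out.

```python
import base64
import json

# Public REST endpoint of the Vision API; calling it requires credentials.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"

CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def build_safe_search_request(image_bytes: bytes) -> dict:
    """Build the JSON body that asks for SafeSearch verdicts on one image."""
    return {
        "requests": [
            {
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                "features": [{"type": "SAFE_SEARCH_DETECTION"}],
            }
        ]
    }


def extract_safe_search(response_body: dict) -> dict:
    """Pull the five category verdicts out of a parsed API response."""
    annotation = response_body["responses"][0].get("safeSearchAnnotation", {})
    return {name: annotation.get(name, "UNKNOWN") for name in CATEGORIES}


# Hypothetical response shaped like the API's JSON (not real output).
sample_response = {
    "responses": [
        {
            "safeSearchAnnotation": {
                "adult": "UNLIKELY",
                "spoof": "LIKELY",
                "medical": "VERY_UNLIKELY",
                "violence": "VERY_UNLIKELY",
                "racy": "POSSIBLE",
            }
        }
    ]
}

body = build_safe_search_request(b"fake image bytes")
print(json.dumps(body["requests"][0]["features"]))
print(extract_safe_search(sample_response))
```

In a real script, the body would be POSTed to `VISION_ENDPOINT` for every creative in a folder, and the verdicts collected into a table.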

In this article, we’ll look at the indicators in SafeSearch Detection that should be taken into consideration when creating creatives for Facebook Ads.

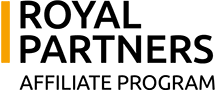

How to check a creative on VisionAI?

The step-by-step instruction is simple. Go to https://cloud.google.com/vision/docs/drag-and-drop and upload an image (drag it into the “Drag image file here or Browse from your computer” field).

Within 1-5 seconds of uploading the image, the service returns the result.

You can check creatives for Facebook and Instagram in JPEG, PNG8, PNG24, GIF, animated GIF (first frame only), BMP, WEBP, RAW, and ICO formats. PDF and TIFF are not supported in the demo version. The maximum file size is 4 MB.
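Before uploading a batch of creatives, these limits can be checked locally. The sketch below is only an illustration. The extension list is our assumption about how the formats above map to file names (RAW is left out because it has no single extension), and we assume the 4 MB limit means 4 × 1024 × 1024 bytes.

```python
from pathlib import Path

# Assumption: "4 MB" is interpreted as 4 MiB.
MAX_DEMO_BYTES = 4 * 1024 * 1024

# Assumption: common extensions for the formats the demo accepts.
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".bmp", ".webp", ".ico"}


def precheck(filename: str, size_bytes: int) -> list[str]:
    """Return a list of problems; an empty list means the file looks acceptable."""
    problems = []
    ext = Path(filename).suffix.lower()
    if ext not in ALLOWED_EXTENSIONS:
        problems.append(f"unsupported extension: {ext or '(none)'}")
    if size_bytes > MAX_DEMO_BYTES:
        problems.append(f"file too large: {size_bytes} bytes")
    return problems


print(precheck("banner.PNG", 350_000))
print(precheck("leaflet.pdf", 350_000))
```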

We are interested in the “Safe Search” tab, where the neural network rates “obscene and inappropriate content” on a scale from 1 to 5 (a sixth value, “Unknown”, means there is no verdict).

Options:

- Unknown;

- Very Unlikely;

- Unlikely;

- Possible;

- Likely;

- Very Likely.

In fact, VisionAI rates an image from 1 (“Very Unlikely”) to 5 (“Very Likely”) on each of the 5 criteria. There is a myth among media buyers: if an image scores more than 1 on these parameters, moderation will not let it through. But it is not that simple.
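The link between the verbal verdicts and the 1 to 5 “points” can be written down explicitly. In the API, the likelihood values are an enum in which `UNKNOWN` is 0 and `VERY_LIKELY` is 5. The helper functions and the sample verdicts below are ours, added only for illustration.

```python
# Likelihood names as returned by the API, mapped to the "points" used in this article.
LIKELIHOOD_SCORE = {
    "UNKNOWN": 0,
    "VERY_UNLIKELY": 1,
    "UNLIKELY": 2,
    "POSSIBLE": 3,
    "LIKELY": 4,
    "VERY_LIKELY": 5,
}


def to_scores(verdicts: dict) -> dict:
    """Convert {"racy": "LIKELY", ...} into {"racy": 4, ...}."""
    return {category: LIKELIHOOD_SCORE.get(value, 0) for category, value in verdicts.items()}


def categories_above(scores: dict, threshold: int = 1) -> list:
    """List the categories whose score is strictly above the threshold."""
    return sorted(category for category, score in scores.items() if score > threshold)


# Hypothetical verdicts for one creative (not real output).
verdicts = {
    "adult": "UNLIKELY",
    "spoof": "LIKELY",
    "medical": "VERY_UNLIKELY",
    "violence": "VERY_UNLIKELY",
    "racy": "POSSIBLE",
}

scores = to_scores(verdicts)
print(scores)
print(categories_above(scores))
```

The default threshold of 1 mirrors the media buyers' myth. It is not a rule of Facebook moderation.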

Google’s service can do more than that. For example, the Vision API supports the following features:

- image recognition with text;

- calculation of dominant colors;

- geographic landmark detection;

- logo detection;

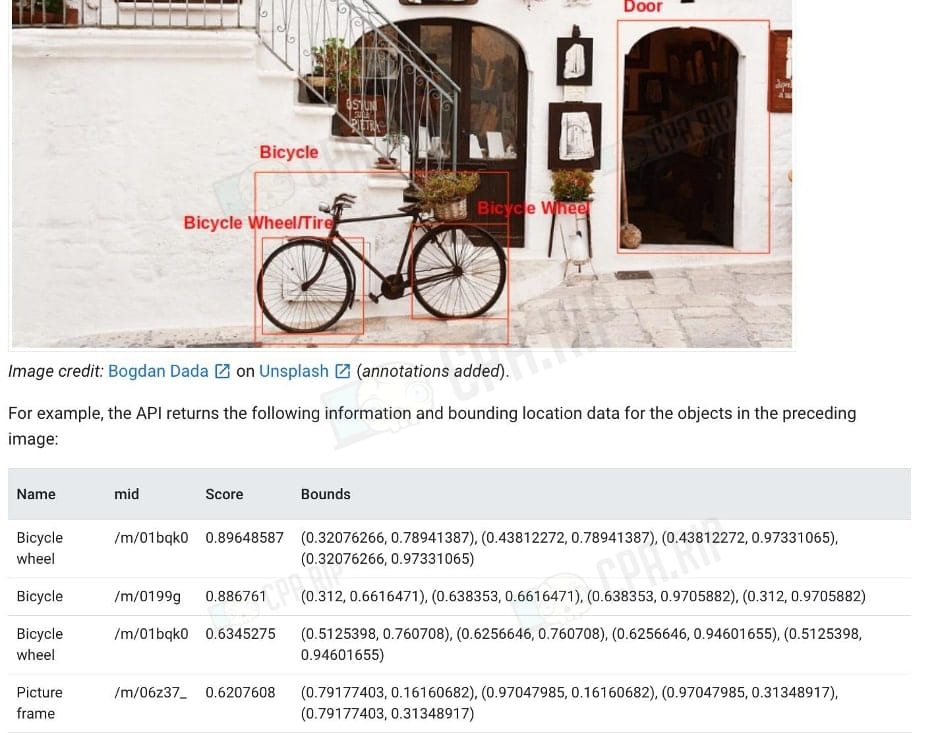

- detection of multiple objects;

- detection of potentially unsafe or undesirable content (the same SAFE_SEARCH);

- character recognition within image text (OCR).
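When working through the API, each of the functions listed above is requested as a “feature type”. The sketch below shows one possible mapping. The type names are real Vision API feature types, but which type matches which bullet is our reading of the list, and the `max_results` value is an arbitrary example.

```python
# Our reading of which API feature type corresponds to each item in the list above.
FEATURE_TYPES = {
    "text in images": "TEXT_DETECTION",
    "dominant colors": "IMAGE_PROPERTIES",
    "landmarks": "LANDMARK_DETECTION",
    "logos": "LOGO_DETECTION",
    "multiple objects": "OBJECT_LOCALIZATION",
    "unsafe content": "SAFE_SEARCH_DETECTION",
    "dense text (OCR)": "DOCUMENT_TEXT_DETECTION",
}


def build_features(wanted: list, max_results: int = 10) -> list:
    """Build the "features" array of an images:annotate request."""
    return [{"type": FEATURE_TYPES[name], "maxResults": max_results} for name in wanted]


features = build_features(["unsafe content", "multiple objects", "text in images"])
for feature in features:
    print(feature)
```

Several features can be requested for the same image in a single call, which is convenient when both the SafeSearch verdicts and the detected objects are needed.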

Vision can detect events, news, celebrities, similar images, and more.

Additional information can be derived from the image:

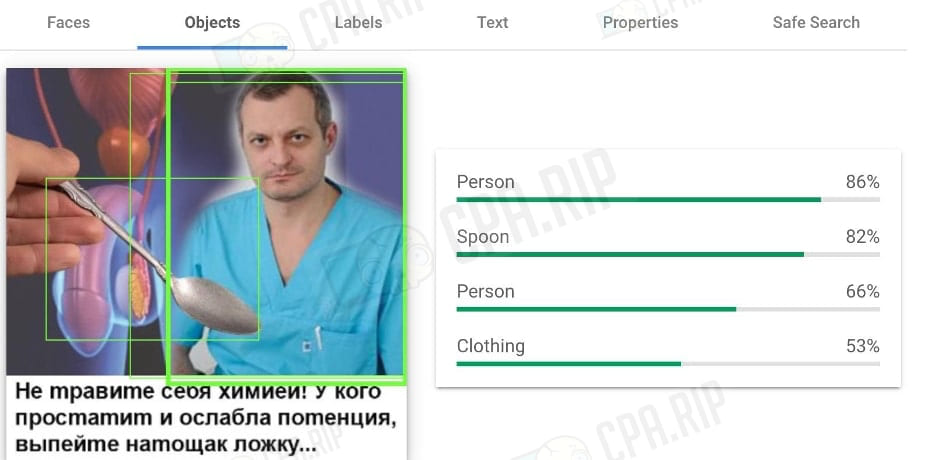

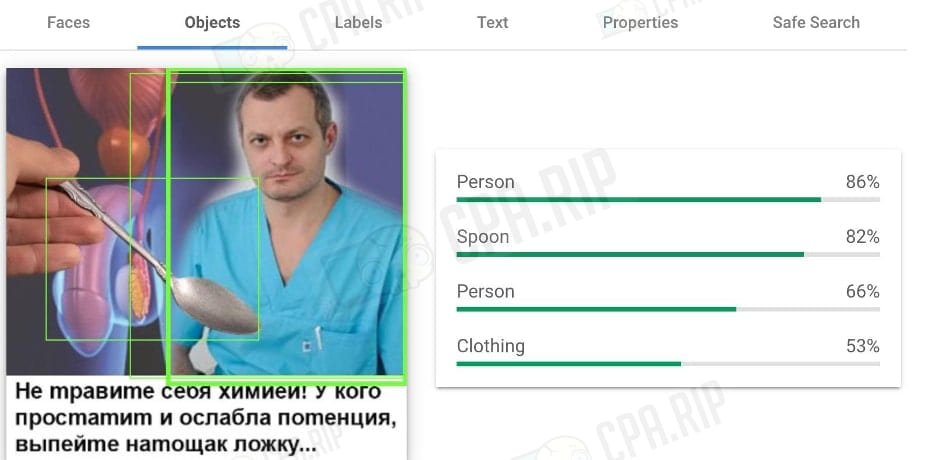

- “Objects” – what is depicted (what the artificial intelligence sees).

- “Faces” – the neural network recognizes faces and tries to read emotions (joy, sadness, anger, surprise, and so on).

- “Labels” – VisionAI can identify common objects, locations, activities, animal species, products, and more. Essentially, here is a set of everything that artificial intelligence sees and perceives.

- “Properties” – shows the dominant colors as hex codes (for example, #2CFD0D) and RGB values (RGB(44, 253, 13)), along with other parameters.

- “Text” – the neural network reads the text in the creative. However, it does not always do it correctly. If it says “GET $2000” with layering, it reads “20GETOS”.
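The two color notations in the “Properties” tab describe the same value: each pair of hex digits is one RGB channel. A small sketch of the conversion (the function names are ours):

```python
def hex_to_rgb(hex_color: str) -> tuple:
    """Convert "#2CFD0D" into (44, 253, 13)."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected 6 hex digits, got {hex_color!r}")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(red: int, green: int, blue: int) -> str:
    """Convert (44, 253, 13) into "#2CFD0D"."""
    return f"#{red:02X}{green:02X}{blue:02X}"


print(hex_to_rgb("#2CFD0D"))     # (44, 253, 13)
print(rgb_to_hex(44, 253, 13))   # #2CFD0D
```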

Let’s look at what kinds of “potentially inappropriate content” affiliates managed to push through, and with what scores (according to Facebook’s Ad Library; see “How to Search Creatives and Bundles”).

What VisionAI sees in adult creatives from Facebook

We won’t show examples of creatives that get 5 out of 5 for “Adult” content. Images with a clearly sexual connotation, which the neural network has learned to read, get 5 points. This includes demonstrations of organs, poses, etc. “Racy” is often assigned to a picture alongside “Adult”.

Filters and color correction usually “neutralize” such creatives to some extent. For example, services like Go Art can partially reduce the “degree of heat”. Still, the artificial intelligence guesses that something is wrong: the picture gets rather high risk scores for “Adult” and “Racy” even after adding mosaics, grain, or other filters. At the same time, such processing can make the creative uninteresting to users.

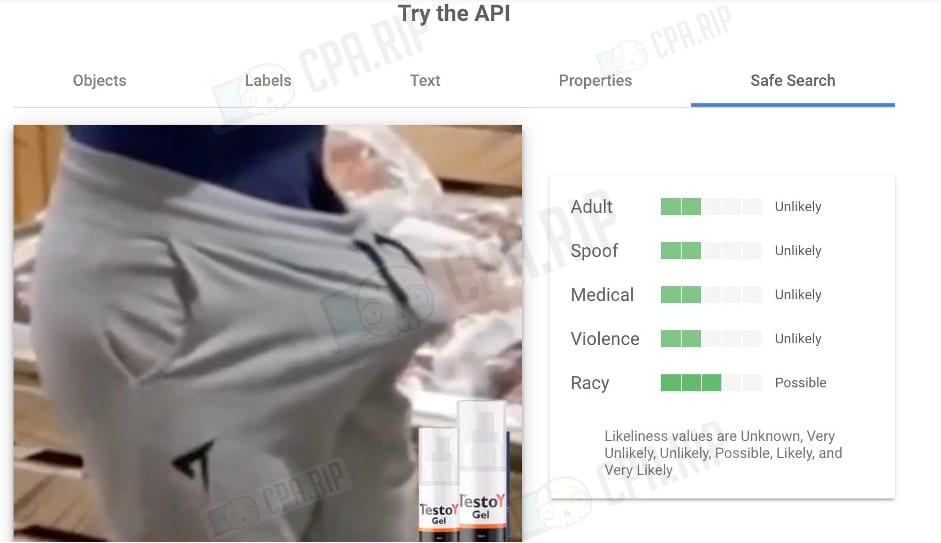

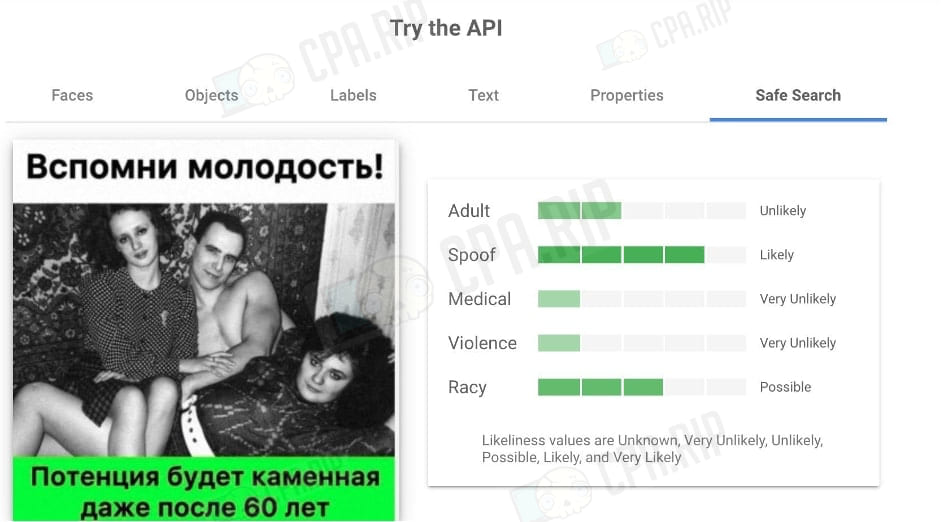

Let’s look at examples of creatives to which the neural network assigns 3-4 points. The AI has a problem with the combination of M + F + bathroom (and with creatives showing M and F in general). For almost all creatives with an image of a man and a woman, the artificial intelligence gives 2-3 points by default.

Let’s try to lower the degree of 18+ and put an image with a hint.

The neural network sees the “spicy” context of the image and rates our picture at 3 points on the “Racy” indicator. The picture scores 4 points on the “Spoof” criterion. Yet, it can be used.

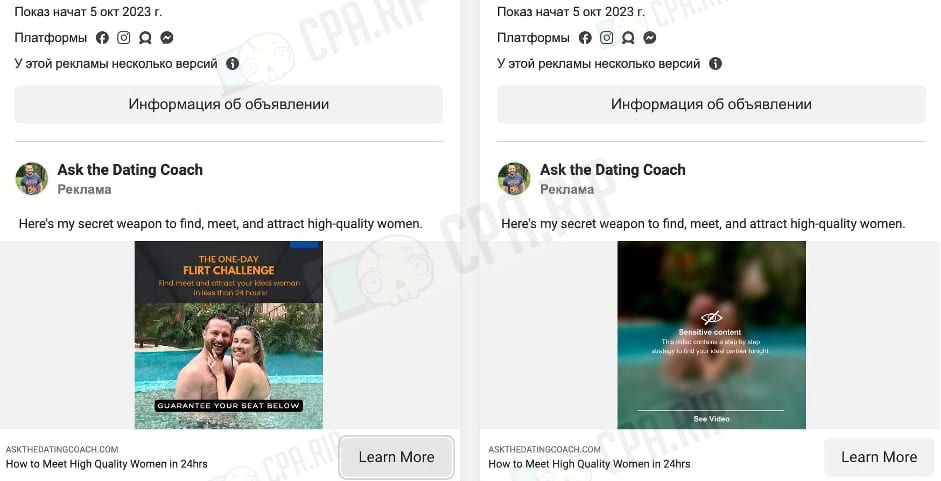

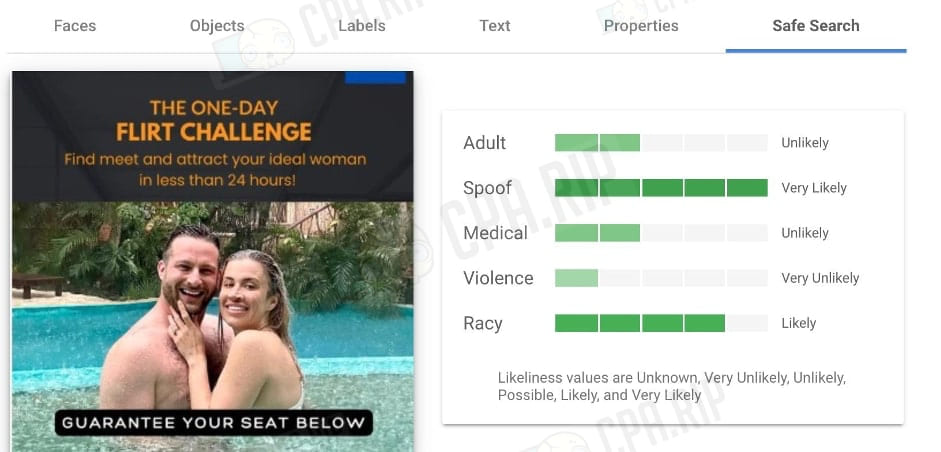

Let’s go further and see how artificial intelligence evaluates a photo of a man and a woman in a swimming pool. These ads are also shown.

VisionAI rates the sexual innuendo at 4 out of a possible 5 points. But the artificial intelligence gives the maximum score on the “Spoof” criterion. A couple in which both are smiling and happy is, according to the AI, a clear deception.

However, “Spoof” is one of the most frequent verdicts given by Vision.

How AI evaluates creatives according to the “Spoof” criteria

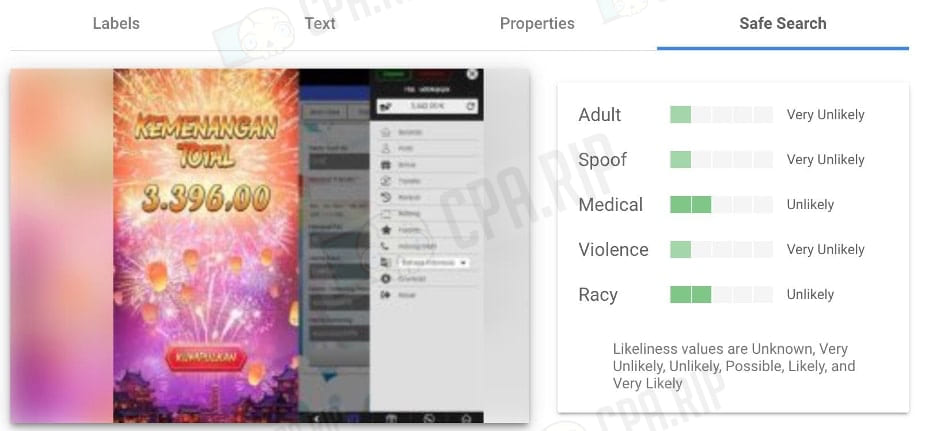

Gambling, betting, and cryptocurrencies with a certain approach are not evaluated as spoofed by the service at all.

A screenshot of a dizzying jackpot worth thousands of dollars against a background of fireworks is enough: Google’s neural network gives it 1 point for “Spoof” and, for unknown reasons, detects medical content.

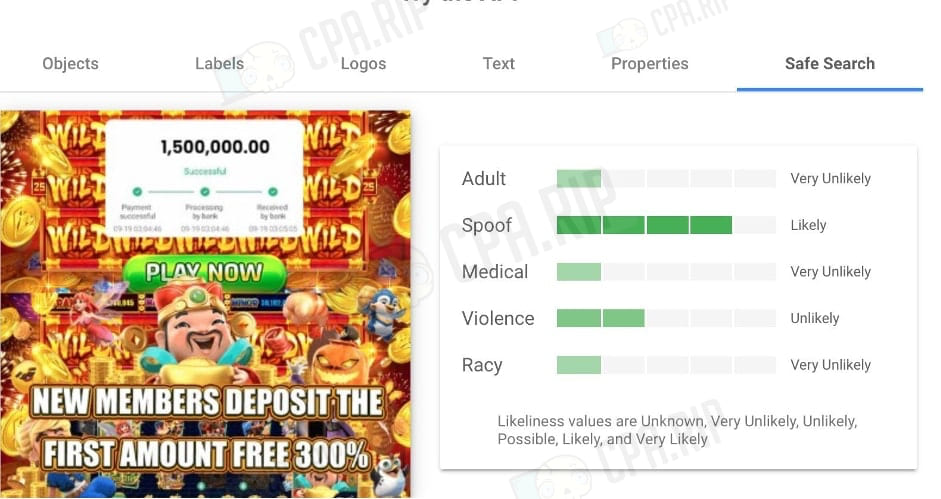

At the same time, if the creative shows slot machine mechanics (Wild symbols, main characters, a “Play Now” button), VisionAI immediately suspects something is wrong and gives the picture 4 points out of a possible 5 for “Spoof”. However, this does not prevent the creative from being shown.

The neural network has an ambiguous opinion about earning money on the Internet. An ad for a crypto earning strategy seems suspicious to the artificial intelligence (despite the errors in reading the offer in the creative: because of layering, the AI sees not “GET $2000” but “20GETOS”).

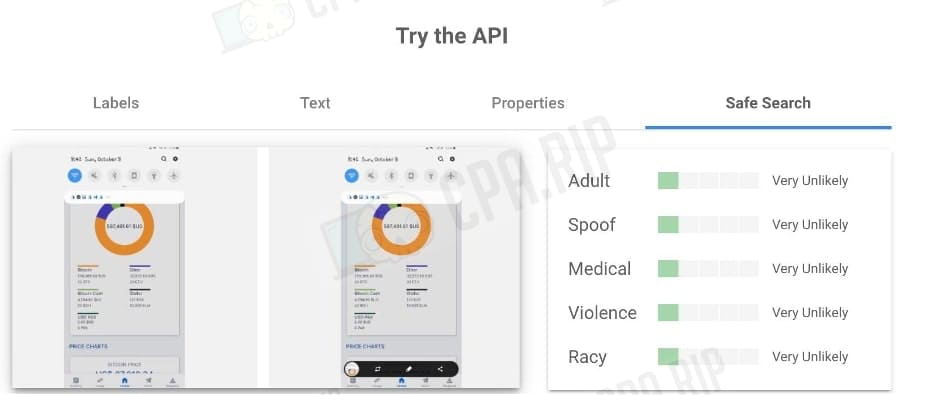

A story from a trader, Brian, about how he earned 200,000 dollars on a crypto exchange, with a demonstration of an investment portfolio containing Bitcoin, Bitcoin Cash, Stellar, and others, does not look like a deception to the AI at all:

Most likely, this is because the font is too small and the artificial intelligence simply cannot understand the essence of the offer. By the way, experts call such results, with “ones on all points” and “Very Unlikely” everywhere, perfect for those who want to minimize the risk of blocking.

How neural network evaluates creatives with medical content

Joints, potency, prostatitis, hemorrhoids, cellulite, baldness, heart – in general, everything related to health and drugs can fall into the “medical” category. But how does artificial intelligence detect “medical topics” in creatives?

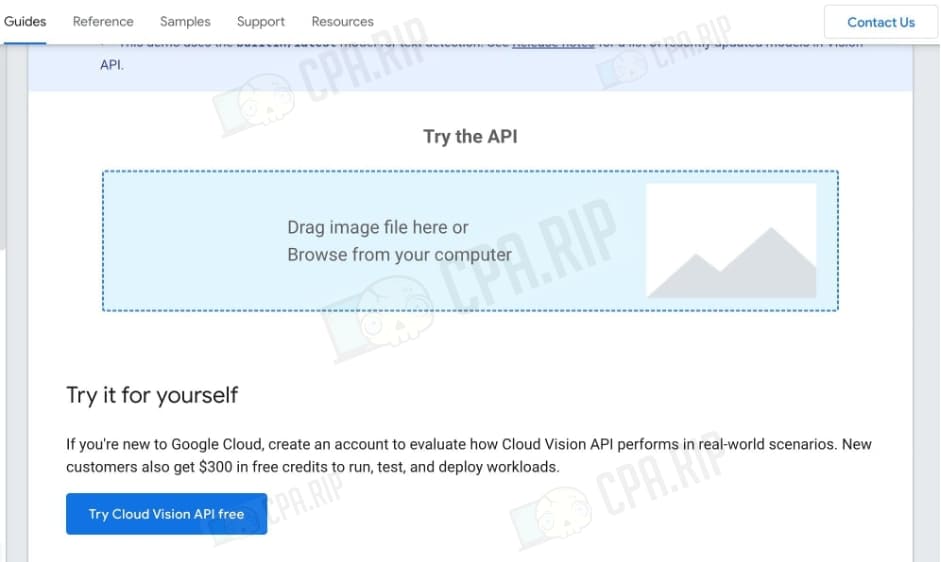

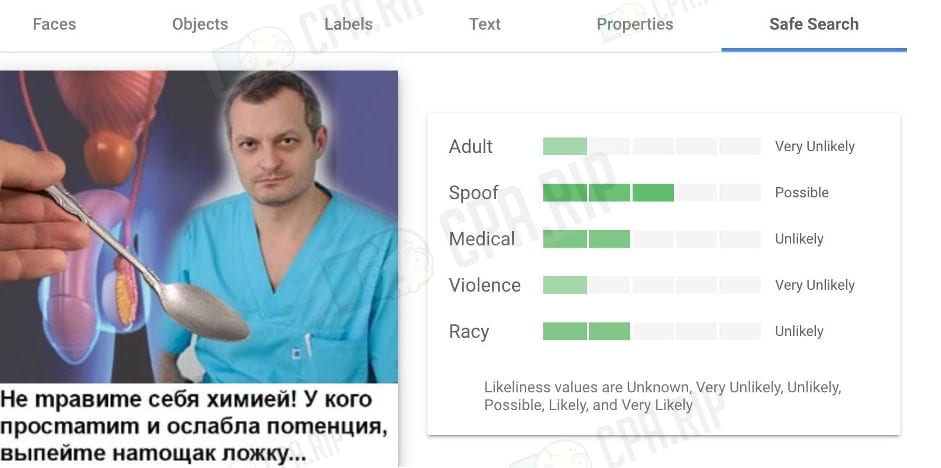

For example, here’s the creative that scored 2 points for “Racy” and “Medical” and 3 points for “Spoof”.

Why is it so? It clearly advertises some drug (in the background there is even a clear hint of what kind of drug it is) and there is also a doctor.

To understand how the neural network recognizes the image, it is worth going to the “Objects” tab. Artificial intelligence does not see the main object of the advertisement (in the background), because it is “interrupted” by a spoon. This is the case when several layers of the picture confuse the AI.

The neural network doesn’t “read” the individual objects in the background, but sees them as one because of the spoon.

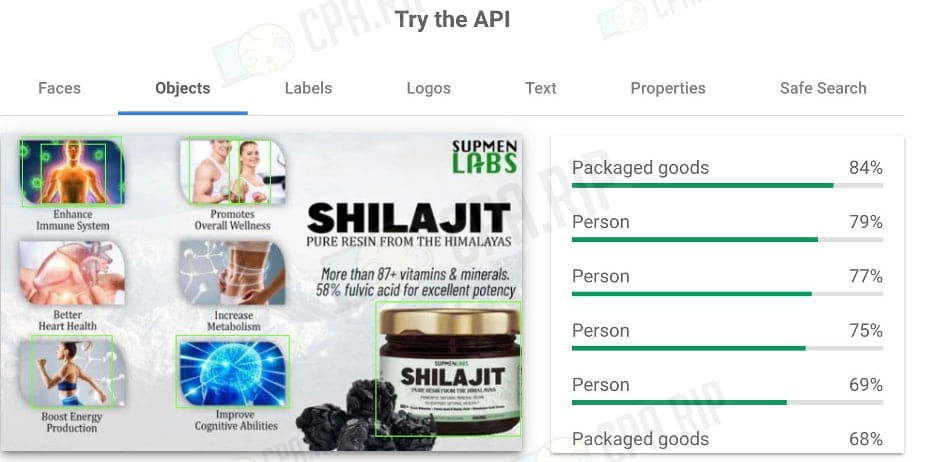

The neural network gets lost if there are multiple small images in the creative, so the score is not always objective. For example:

Strangely, the neural network rates an obviously medical image at only 2 points, even though it shows a pack with a drug, organs, and people.

But the “Objects” tab makes it clear why VisionAI passes the creative. Artificial intelligence sees only people and product packaging on it.

Artificial Intelligence is often unable to find relationships between medical tools/procedures that are clearly understandable to humans. As in the case of hemorrhoids:

“Medical” – 2 points, but “Racy” is still 4. The neural network realizes that the image is “racy” but can’t connect it with medicine.

The most harmless medical creatives from the SafeSearch point of view look like this:

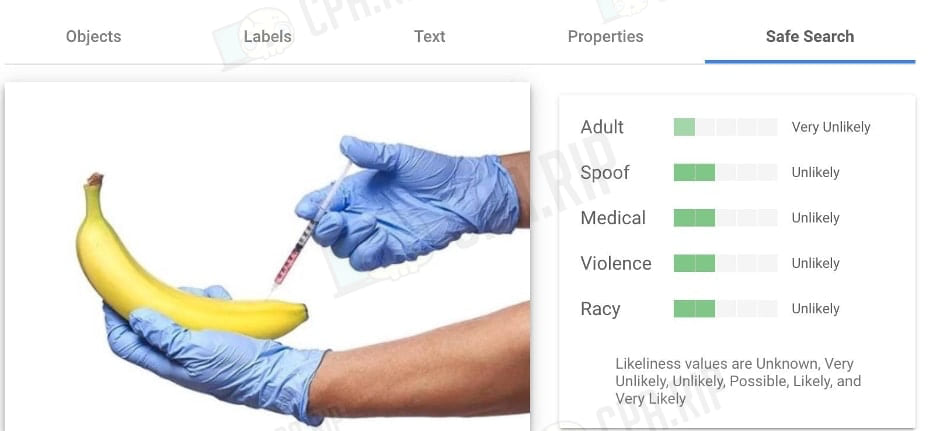

The association with a banana is as old as the world, but the neural network does not yet seem to know how to connect all the elements in the image into a single picture (as the human brain does).

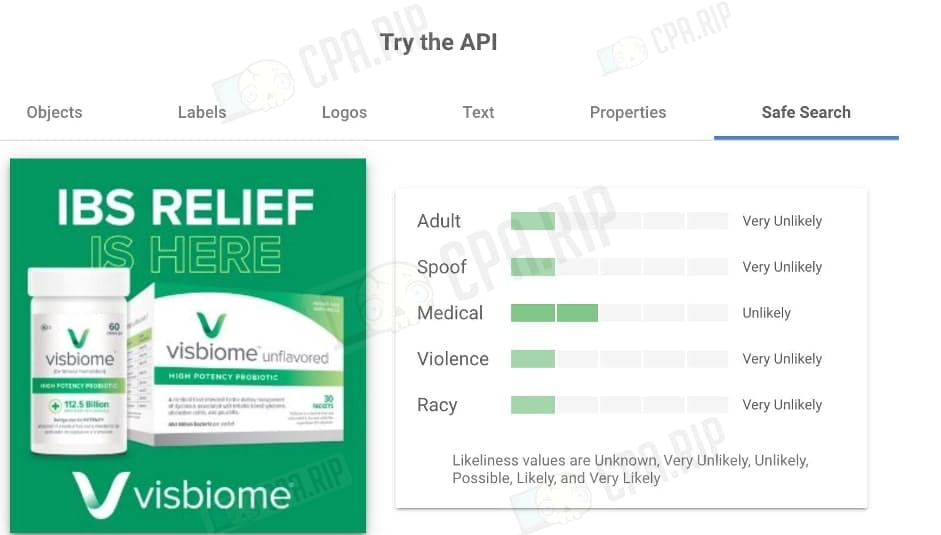

Finally, the easiest option for a creative is a neutral description of the drug package. According to SafeSearch, the image is safe.

Evaluating creatives for violence

It was almost impossible to find creatives that would be rated 5 out of 5 for violence by the neural network.

Even for pictures of UFC fights, the service prefers to assign “Spoof”. The same applies to creatives with images of weapons.

How AI detects racy content

The neural network recognizes bare body parts well, immediately giving 4 points out of 5 possible for “Racy”.

What’s more, VisionAI has learned to recognize emotions and environments (male, female, bathroom) to understand context and give 4 points on the “Racy” criterion, even if there are no bare body parts and faces are partially hidden or cropped.

If nudity appears, the “Racy” level jumps to the maximum of 5 out of 5 (assessing not only body parts but context as well).

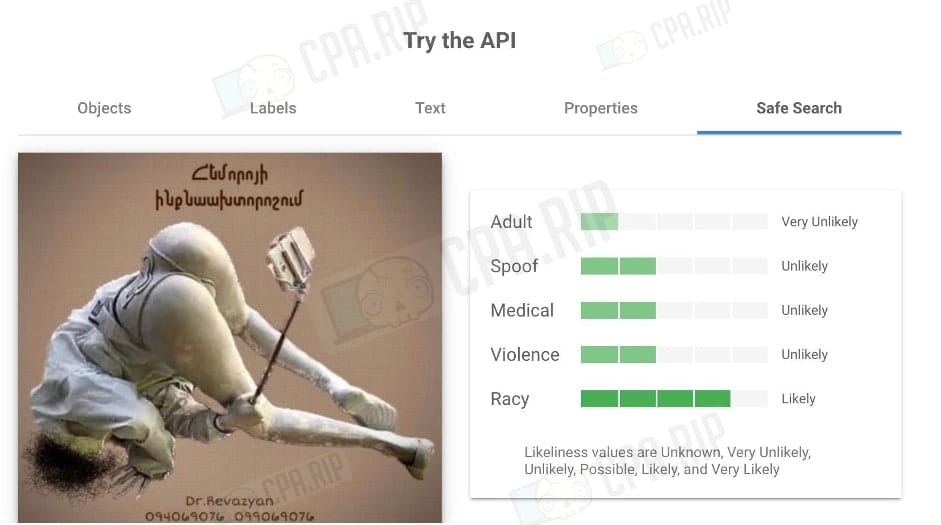

However, the neural network can recognize the racy context even if the main characters are not people. At the same time, the “Racy” criterion score reaches the maximum 5 points out of 5 possible, although the image is much more innocuous than the ones presented above (without naked body parts, demonstration of organs, etc.).

Note that the neural network can recognize even drawn images (illustrations) and schematic notations.

Thus, the neural network most often marks creatives as:

- “Racy” – racy content;

- “Spoof” – deception, misleading, fraud.

Maximum SafeSearch scores of 5 points for “Medical”, “Adult”, and “Violence” are less frequent.

To use or not to use VisionAI to evaluate creatives

It’s hard to predict how Facebook’s algorithms will work. A creative may pass in one ad set but be rejected in another. Lately, affiliates in chats have been complaining that even harmless and neutral creatives or white offers are rejected.

Using Google Vision is valuable not only for the SafeSearch tab with its five criteria and ratings of undesirable content. The “Objects” section is also interesting.

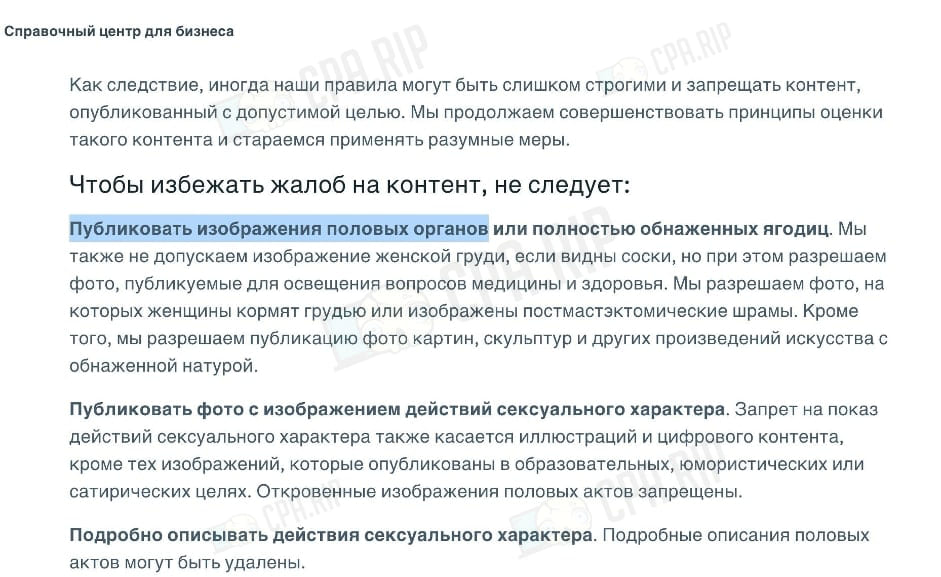

For example, we know that Meta forbids “publishing images of genitals”.

But the bot doesn’t always recognize them (as in the images below).

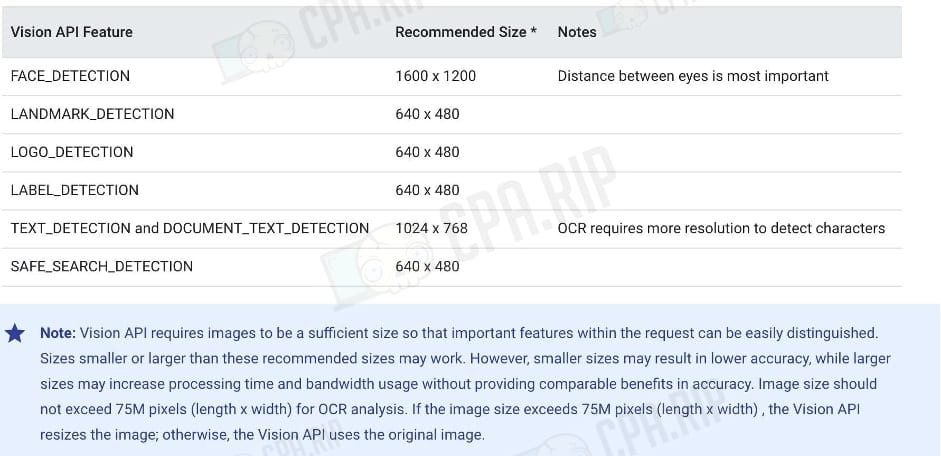

The image requirements give an indirect hint about how the artificial intelligence searches for objects. The service recommends images of at least 640 x 480 pixels. For face detection, the distance between the eyes is very important, and character (text) recognition requires high-resolution images.
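The 640 x 480 figure is easy to check before uploading. As an illustration, the sketch below reads the width and height straight from a PNG header using only the standard library. It handles PNG files only, the helper names are ours, and treating 640 x 480 as a minimum for width and height separately is our simplification.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MIN_WIDTH, MIN_HEIGHT = 640, 480


def png_size(data: bytes) -> tuple:
    """Read (width, height) from the IHDR chunk at the start of a PNG file."""
    if len(data) < 24 or data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Width and height are two big-endian 32-bit integers right after "IHDR".
    return struct.unpack(">II", data[16:24])


def meets_size_hint(data: bytes) -> bool:
    """True if the image is at least 640 pixels wide and 480 pixels high."""
    width, height = png_size(data)
    return width >= MIN_WIDTH and height >= MIN_HEIGHT


def fake_png_header(width: int, height: int) -> bytes:
    """Build just enough of a PNG header for a demonstration (not a valid image)."""
    return PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR" + struct.pack(">II", width, height)


print(png_size(fake_png_header(1200, 628)), meets_size_hint(fake_png_header(1200, 628)))
print(png_size(fake_png_header(300, 250)), meets_size_hint(fake_png_header(300, 250)))
```

For a real file, the first 24 bytes can be read with `open(path, "rb").read(24)` and passed to `png_size`.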

Each detected object gets a “mid”, a machine-generated identifier that corresponds to an entity in Google Knowledge Graph.

However, you shouldn’t fixate on getting the “mandatory” 1-2 points from VisionAI for your creatives. The information provided by Google’s VisionAI should be taken holistically (and in conjunction with other factors).

Naturally, Google does not disclose what data the artificial intelligence is trained on or what specific parameters are used to set the “scores”. If the corporation revealed all the algorithms and mechanics, there would definitely be those who want to cheat the system.

Conclusions about VisionAI: How to use AI to analyze creatives

- VisionAI is a Google neural network that has gotten pretty good at reading subtext in images like “girl’s face and banana / waffle cone”. At the same time, hidden subtexts in images like “hands and banana” still escape the AI. Associative thinking is a weakness of artificial intelligence.

- Bananas, cucumbers, ice cream in a waffle cone, a cut grapefruit – artificial intelligence often fails to recognize these as Racy or Adult.

- Pictures of M + F (even the most innocent ones) can get 2-3 points on the “Racy” criterion. The neural network seems to live with 5-year-old stereotypes (M + F, girl, and money ads).

- Vision can find sexual connotations even in “drawn” and cartoon images.

- Images that receive 3-4 points for “adult” content often have high scores in other categories (Racy and Spoof).

- Creatives with drugs and neutral descriptions (no body parts, etc.) receive 1-3 “medical” scores from Vision. Overall, it considers them harmless.

- Videos with news content about “scientists went to the African tribe to learn the secret of big sizes…” are also ambiguously evaluated by the AI. In videos like https://www.facebook.com/ads/library/?active_status=all&ad_type=all&country=ALL&q=grow%20penis&search_type=keyword_unordered&media_type=all, artificial intelligence can’t see the difference between ads and research and news content.

- Vision can recognize text on creatives, and quite well. OCR Language Support supports more than 50 languages, including Russian, English, Polish, Yiddish, Ukrainian, and Turkish. Some of them are in “Experimental” status (Georgian, Azeri, Uzbek, Kazakh, Mongolian). But there are options to adjust the “layering of backgrounds” so that the user reads the correct message like “GET $2000” and the neural network is satisfied with “20GETOS” (as in the example).

AI doesn’t successfully work with:

- layers and objects in the creative – when there are too many of them, AI gets confused and recognizes them incorrectly;

- associative thinking, fantasy, picture conjuring – artificial intelligence often sees separate objects, but is not able to put them into “one puzzle” as the human brain does;

- numerous small images – the AI loses the essence and does not see the main point of the picture;

- hidden subtext (as in the first creative with gray pants) – the neural network recognizes harmless objects like pants, a shoelace, and a brand logo, but the user sees the whole essence;

- demonstrations of winnings / celebration / fireworks without a slot machine interface – AI recognizes joy, but doesn’t see gambling;

- news content formats and scientific research – Meta does not prohibit publications for “educational, humorous or satirical purposes”, but AI is not always able to recognize them;

- “layering” of text and fonts – AI reads text letter by letter, not layer by layer.

Don’t forget that in the pursuit of “whitening creatives” and “fitting to services” there is a risk of losing the interest of the audience. Recommendations in the style of “1-2 points on SafeSearch Detection VisionAI” have the right to exist, but they should be treated with caution.

Google promises that images uploaded to VisionAI for analysis will not be used to train neural networks. It does store them, because that is necessary for evaluation, but not for long: it analyzes them, gives you the result, and immediately deletes them.